Scalable Deep Learning via I/O Analysis and Optimization

- Virginia Polytechnic Inst. and State Univ. (Virginia Tech), Blacksburg, VA (United States)

- Argonne National Lab. (ANL), Argonne, IL (United States)

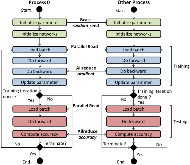

Scalable deep neural network training has been gaining prominence because of the increasing importance of deep learning in a multitude of scientific and commercial domains. Consequently, a number of researchers have investigated techniques to optimize deep learning systems. Much of the prior work has focused on runtime and algorithmic enhancements to optimize computation and communication. Despite these enhancements, however, deep learning systems still suffer from scalability limitations, particularly with respect to data I/O. This is especially true when training models whose computation can be effectively parallelized, which leaves I/O as the major bottleneck. In fact, our analysis shows that I/O can take up to 90% of the total training time. Thus, in this article, we first analyze LMDB, the most widely used I/O subsystem of deep learning frameworks, to understand the causes of this I/O inefficiency. Based on our analysis, we propose LMDBIO, an optimized I/O plugin for scalable deep learning. LMDBIO includes six novel optimizations that together address the various shortcomings in existing I/O for deep learning. Lastly, our experimental results show that LMDBIO significantly outperforms LMDB in all cases and improves overall application performance by up to 65-fold on a 9,216-core system.

- Research Organization: Argonne National Lab. (ANL), Argonne, IL (United States)

- Sponsoring Organization: National Science Foundation (NSF); USDOE Office of Science (SC), Advanced Scientific Computing Research (ASCR)

- Grant/Contract Number: AC02-06CH11357

- OSTI ID: 1569281

- Journal Information: ACM Transactions on Parallel Computing, Vol. 6, Issue 2; ISSN 2329-4949

- Publisher: Association for Computing Machinery

- Country of Publication: United States

- Language: English

