Use of DAGMan in CRAB3 to improve the splitting of CMS user jobs

- Univ. of Notre Dame, IN (United States)

- Fermi National Accelerator Lab. (FNAL), Batavia, IL (United States)

- Istituto Nazionale di Fisica Nucleare (INFN), Trieste (Italy)

- Univ. of Nebraska, Lincoln, NE (United States)

- Research Centre for Energy, Environment and Technology (CIEMAT), Madrid (Spain)

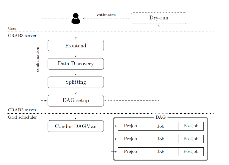

CRAB3 is a workload management tool used by CMS physicists to analyze data acquired by the Compact Muon Solenoid (CMS) detector at the CERN Large Hadron Collider (LHC). Research in high energy physics often requires the analysis of large collections of files, referred to as datasets. The task is divided into jobs that are distributed among a large collection of worker nodes throughout the Worldwide LHC Computing Grid (WLCG). Splitting a large analysis task into optimally sized jobs is critical to efficient use of distributed computing resources. Jobs that are too big will have excessive runtimes and will not distribute the work across all of the available nodes. However, splitting the task into a large number of very small jobs is also inefficient, as each job adds overhead that increases the load on infrastructure resources. Currently this splitting is done manually, using parameters provided by the user. However, the resources needed for each job are difficult to predict because of frequent variations in the performance of the user code and the content of the input dataset. As a result, dividing a task into jobs by hand is difficult and often suboptimal. In this work we present a new feature called “automatic splitting” which removes the need for users to manually specify job splitting parameters. We discuss how HTCondor DAGMan can be used to build dynamic Directed Acyclic Graphs (DAGs) to optimize the performance of large CMS analysis jobs on the Grid. We use DAGMan to dynamically generate interconnected DAGs that estimate the processing time the user code will require to analyze each event. This is used to calculate an estimate of the total processing time per job, and a set of analysis jobs is run using this estimate as a specified time limit. Some jobs may not finish within the allotted time; they are terminated at the time limit, and the unprocessed data is regrouped into smaller jobs and resubmitted.
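The three stages described above (estimate the per-event processing time, size jobs to a time limit, then regroup unfinished work into smaller jobs) can be illustrated with a minimal Python sketch. This is not the CRAB3 implementation: the function names, the treatment of the input as one contiguous range of event numbers, and the choice of a smaller chunk size for resubmission are all assumptions made for illustration.

```python
import math


def events_per_job(seconds_per_event, target_runtime_s):
    """Number of events a job can process within the time limit (at least 1).

    `seconds_per_event` stands in for the estimate produced by the
    short probing stage; both names are hypothetical.
    """
    if seconds_per_event <= 0:
        raise ValueError("seconds_per_event must be positive")
    return max(1, math.floor(target_runtime_s / seconds_per_event))


def split_events(first_event, last_event, chunk):
    """Split the inclusive range [first_event, last_event] into (start, end) jobs."""
    if chunk < 1:
        raise ValueError("chunk must be at least 1")
    return [
        (start, min(start + chunk - 1, last_event))
        for start in range(first_event, last_event + 1, chunk)
    ]


def resplit_unfinished(job, events_done, smaller_chunk):
    """Regroup the unprocessed tail of a job that hit the time limit.

    `job` is an inclusive (start, end) pair and `events_done` is how many
    events it completed before being terminated (assumed to be known).
    """
    start, end = job
    first_unprocessed = start + events_done
    if first_unprocessed > end:
        return []  # the job actually finished; nothing to resubmit
    return split_events(first_unprocessed, end, smaller_chunk)
```

As a usage example, a probe that measures 0.5 s per event with an 8 hour (28800 s) limit gives `events_per_job(0.5, 28800)` events per job; `split_events(1, 200000, ...)` then produces the main jobs, and `resplit_unfinished((1, 57600), 40000, 10000)` regroups the tail of a job that was stopped after 40000 events.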

- Research Organization: Fermi National Accelerator Lab. (FNAL), Batavia, IL (United States)

- Sponsoring Organization: USDOE Office of Science (SC), High Energy Physics (HEP)

- Grant/Contract Number: AC02-07CH11359

- OSTI ID: 1420914

- Report Number(s): FERMILAB-CONF-16-753-CD; 1638491

- Journal Information: Journal of Physics. Conference Series, Vol. 898, Issue 5; Conference: 22nd International Conference on Computing in High Energy and Nuclear Physics, San Francisco, CA, 10/10-10/14/2016; ISSN 1742-6588

- Publisher: IOP Publishing

- Country of Publication: United States

- Language: English
