Reinforcement learning based schemes to manage client activities in large distributed control systems

Abstract

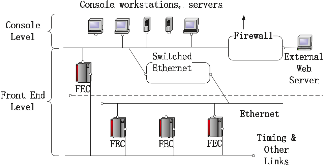

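Large distributed control systems typically can be modeled by a hierarchical structure with two physical layers: console level computers (CLCs) layer and front end computers (FECs) layer. The control system of the Relativistic Heavy Ion Collider (RHIC) at Brookhaven National Laboratory (BNL) consists of more than 500 FECs, each acting as a server providing services to a large number of clients. Hence the interactions between the server and its clients become crucial to the overall system performance. There are different scenarios of the interactions. For instance, there are cases where the server has a limited processing ability and is queried by a large number of clients. Such cases can put a bottleneck in the system, as heavy traffic can slow down or even crash a system, making it momentarily unresponsive. Also, there are cases where the server has adequate ability to process all the traffic from its clients. We pursue different goals in those cases. For the first case, we would like to manage clients’ activities so that their requests are processed by the server as much as possible and the server remains operational. For the second case, we would like to explore an operation point at which the server’s resources get utilized efficiently. Moreover, we consider a real-world time constraint to the above case. The time constraint states that clients expect the responses from the server within a time window. In this work, we analyze those cases from a game theory perspective. We model the underlying interactions as a repeated game between clients, which is carried out in discrete time slots. For clients’ activity management, we apply a reinforcement learning procedure as a baseline to regulate clients’ behaviors. Then we propose a memory scheme to improve its performance. Next, depending on different scenarios, we design corresponding reward functions to stimulate clients in a proper way so that they can learn to optimize different goals.

Through extensive simulations, we show that first, the memory structure improves the learning ability of the baseline procedure significantly. Second, by applying appropriate reward functions, clients’ activities can be effectively managed to achieve different optimization goals.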
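The abstract describes a repeated game in which clients decide, slot by slot, how to use a capacity-limited server, but this record does not give the learning rule or the reward functions. The following is only a minimal sketch of that kind of setup under stated assumptions: the function name `simulate`, the two-action choice (send or idle), the epsilon-greedy update, and the reward values are all hypothetical and are not taken from the paper.

```python
import random


def simulate(num_clients=20, capacity=5, slots=5000, lr=0.1, epsilon=0.1, seed=0):
    """Toy repeated game: in every time slot each client sends (1) or idles (0).

    Assumed, not from the paper: the server can serve at most `capacity`
    requests per slot, and every request in an overloaded slot fails.
    Returns the number of requests sent in each slot.
    """
    rng = random.Random(seed)
    # q[i][a] is client i's running estimate of the reward for action a.
    q = [[0.0, 0.0] for _ in range(num_clients)]
    load_history = []

    for _ in range(slots):
        actions = []
        for i in range(num_clients):
            if rng.random() < epsilon:
                actions.append(rng.randrange(2))  # explore a random action
            else:
                actions.append(1 if q[i][1] > q[i][0] else 0)  # exploit

        load = sum(actions)
        overloaded = load > capacity

        for i, action in enumerate(actions):
            # Hypothetical reward: 0 for idling, +1 for a served request,
            # -1 for a request sent into an overloaded slot.
            if action == 0:
                reward = 0.0
            else:
                reward = -1.0 if overloaded else 1.0
            # Move the estimate for the chosen action toward the reward.
            q[i][action] += lr * (reward - q[i][action])

        load_history.append(load)

    return load_history


if __name__ == "__main__":
    history = simulate()
    tail = history[-1000:]
    print("mean load over the last 1000 slots:", sum(tail) / len(tail))
```

Changing the reward line is the natural place to experiment with different goals, for example penalizing idle server capacity instead of overload; any such variant is likewise an illustration rather than the authors' design.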

- Authors:

- Gao, Y.; Chen, J.; Robertazzi, T.; Brown, K. A.

- Publication Date:

- 2019-01-02

- Research Org.:

- Brookhaven National Lab. (BNL), Upton, NY (United States); Stony Brook Univ., NY (United States)

- Sponsoring Org.:

- USDOE; National Science Foundation (NSF)

- OSTI Identifier:

- 1489305

- Alternate Identifier(s):

- OSTI ID: 1491685

- Report Number(s):

- BNL-210905-2019-JAAM

- Journal ID: ISSN 2469-9888; PRABCJ; 014601

- Grant/Contract Number:

- SC0012704; 1553385

- Resource Type:

- Published Article

- Journal Name:

- Physical Review Accelerators and Beams

- Additional Journal Information:

- Journal Name: Physical Review Accelerators and Beams; Journal Volume: 22; Journal Issue: 1; Journal ID: ISSN 2469-9888

- Publisher:

- American Physical Society

- Country of Publication:

- United States

- Language:

- English

- Subject:

- 97 MATHEMATICS AND COMPUTING; collective behavior in networks; computational complexity; evolving networks; network formation & growth; coherent structures; collective dynamics; high dimensional systems; nonlinear time-delay systems

Citation Formats

Gao, Y., Chen, J., Robertazzi, T., and Brown, K. A. Reinforcement learning based schemes to manage client activities in large distributed control systems. United States: N. p., 2019. Web. doi:10.1103/PhysRevAccelBeams.22.014601.

Gao, Y., Chen, J., Robertazzi, T., & Brown, K. A. Reinforcement learning based schemes to manage client activities in large distributed control systems. United States. https://doi.org/10.1103/PhysRevAccelBeams.22.014601

Gao, Y., Chen, J., Robertazzi, T., and Brown, K. A. 2019. "Reinforcement learning based schemes to manage client activities in large distributed control systems". United States. https://doi.org/10.1103/PhysRevAccelBeams.22.014601.

@article{osti_1489305,

title = {Reinforcement learning based schemes to manage client activities in large distributed control systems},

author = {Gao, Y. and Chen, J. and Robertazzi, T. and Brown, K. A.},

abstractNote = {Large distributed control systems typically can be modeled by a hierarchical structure with two physical layers: console level computers (CLCs) layer and front end computers (FECs) layer. The control system of the Relativistic Heavy Ion Collider (RHIC) at Brookhaven National Laboratory (BNL) consists of more than 500 FECs, each acting as a server providing services to a large number of clients. Hence the interactions between the server and its clients become crucial to the overall system performance. There are different scenarios of the interactions. For instance, there are cases where the server has a limited processing ability and is queried by a large number of clients. Such cases can put a bottleneck in the system, as heavy traffic can slow down or even crash a system, making it momentarily unresponsive. Also, there are cases where the server has adequate ability to process all the traffic from its clients. We pursue different goals in those cases. For the first case, we would like to manage clients’ activities so that their requests are processed by the server as much as possible and the server remains operational. For the second case, we would like to explore an operation point at which the server’s resources get utilized efficiently. Moreover, we consider a real-world time constraint to the above case. The time constraint states that clients expect the responses from the server within a time window. In this work, we analyze those cases from a game theory perspective. We model the underlying interactions as a repeated game between clients, which is carried out in discrete time slots. For clients’ activity management, we apply a reinforcement learning procedure as a baseline to regulate clients’ behaviors. Then we propose a memory scheme to improve its performance. Next, depending on different scenarios, we design corresponding reward functions to stimulate clients in a proper way so that they can learn to optimize different goals. 
Through extensive simulations, we show that first, the memory structure improves the learning ability of the baseline procedure significantly. Second, by applying appropriate reward functions, clients’ activities can be effectively managed to achieve different optimization goals.},

doi = {10.1103/PhysRevAccelBeams.22.014601},

journal = {Physical Review Accelerators and Beams},

number = 1,

volume = 22,

place = {United States},

year = {2019},

month = {1}

}

https://doi.org/10.1103/PhysRevAccelBeams.22.014601

Figures / Tables:

FIG. 1: RHIC system hardware architecture.
